Two Papers Accepted at ISCAS 2026

1) Graph-Based Methodology for Dynamic KV Caching

2) Bin-Based FP8 MAC for Edge AI

Guest Talk: Intro to HPC

Delivered a technical session on high-performance computing (HPC) architectures and parallel scaling at Acharya Institute of Technology.

RTL Design Engineer

SandLogic: Working on low-precision floating-point formats (FP8 E4M3/E5M2, NVFP4) for AI acceleration hardware.

Hardware Intern

Jaitra Inc.: Hardware prototyping of a RISC-V core (BlackParrot RV64GC) on PYNQ-Z2 and ZCU104. Deployed n-gram language models on FPGA.

Junior Research Fellow

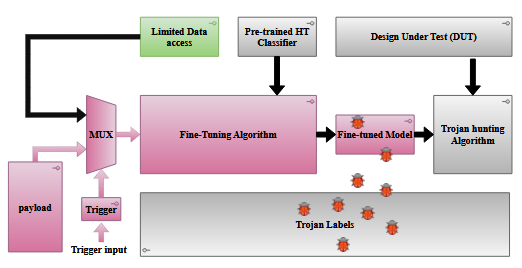

DRDO: Project "Development of Hardware Trojan Testing Methodology". Researched security vulnerabilities in IC supply chains.

M.Tech in VLSI Design

IIT Jodhpur: Master's thesis, "ML Accelerators for NLP Applications".

Teaching Assistant: Hardware for AI, Hardware-Software Co-Design, ASIC Lab.

CGPA: 9.02

GPU Memory Fetch Estimation Engine

Design and modeling of a GPU memory access estimation framework to analyze global, shared, and cache-level fetch behavior. Enables bandwidth utilization analysis, latency prediction, and memory bottleneck identification for high-throughput GPU workloads.

Zero-Shot Attention Unit

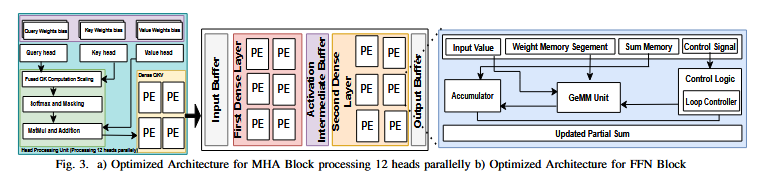

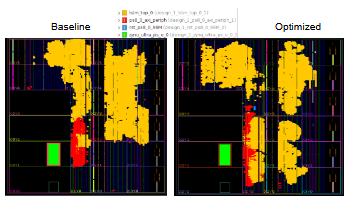

Architected a hardware-efficient self-attention block for GPT-2. Reduced memory footprint by 40% using custom weight-shuffling logic and CORDIC activations.

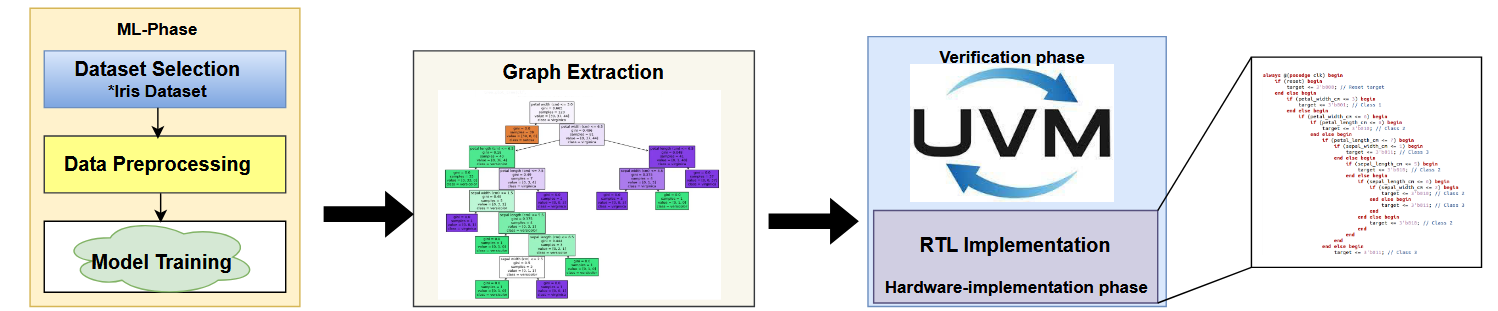

Evaluating Functional Coverage of Accelerator Design for DT-based ML Implementations

A Methodology for Security-Aware Lightweight Deep Neural Network Acceleration

Zero Shot Attention based GPT-2 Accelerator for Resource-Constrained Embedded Platform

Resource-Efficient LSTM Architecture for Keyword Spotting with CORDIC-Activation

A Reconfigurable Floating-Point Compliant Hardware Architecture for Neural Networks

Secure Federated Learning for Gate-Level IP Hardware Trojan Detection Using Homomorphic Encryption

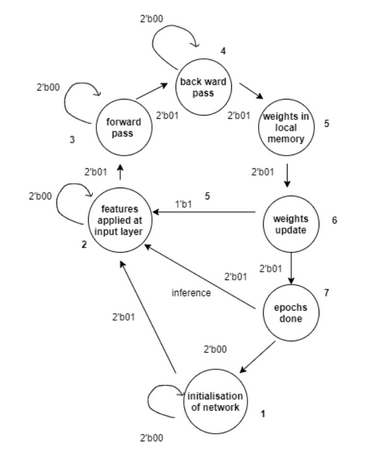

Make Your Neural Network Hardware Accelerator, Part 1

Accelerating machine learning models on custom hardware is the future of AI deployment.

How fast is Hardware?

I ran the same code on custom hardware and in software; here are the benchmarking results.

Simulate Your Neural Network Accelerator on x86

A framework for simulating end-to-end deep neural networks (DNNs) on a custom SoC.

Implementing Sigmoid Activation Function in Hardware

Activation functions are the secret sauce that lets neural networks model non-linear relationships.

APB Bus Design and Verification with cocotb

From RTL to Fully Tested in Minutes using SystemVerilog and Python.

Running Caffe Models on NVDLA Accelerator

A practical guide to NVIDIA’s open-source Deep Learning Accelerator using Docker.

Building Your Own FPU

How Computers Actually Do Math: From 0.1 + 0.2 ≠ 0.3 to working floating-point hardware.

FPGA Acceleration: CORDIC Algorithm

Implementing the CORDIC algorithm with AXI-Stream and PYNQ.

Decision Tree in Silicon: Verilog

Implementing predictive models in hardware using Verilog.

AES Implementation in Verilog

Revolutionizing Data Security: A Beginner’s Guide to AES Implementation.

Background

I am an RTL Design Engineer with a strong foundation in digital systems, specializing in AI accelerators and secure hardware architectures. My work ranges from prototyping RISC-V cores on FPGAs to implementing efficient floating-point arithmetic for neural networks.

Currently at SandLogic, I am diving deep into low-precision number formats such as FP8 and NVFP4 to push the performance limits of inference engines.

Philosophy

I believe that great hardware is defined by the balance between area, power, and security. My approach combines rigorous verification methodologies with innovative architectural optimizations to create silicon that is not just fast, but reliable and efficient.

Have questions or opportunities?

I'm always open to discussing hardware design, research, or new collaborations. Drop me an email or chat on Discord.

कर्मण्येवाधिकारस्ते मा फलेषु कदाचन ।

मा कर्मफलहेतुर्भूर्मा ते सङ्गोऽस्त्वकर्मणि ॥

Bhagavad Gita 2.47

"You have a right to perform your prescribed duty, but you are not entitled to the fruits of action. Never consider yourself the cause of the results of your activities, and never be attached to inaction."